MAIVRIK

Individual deep feature layers do not contain adequate information for aerial object detection

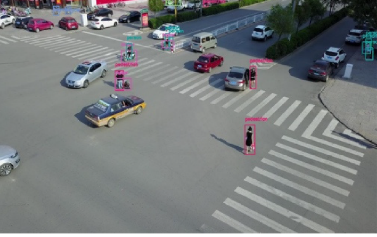

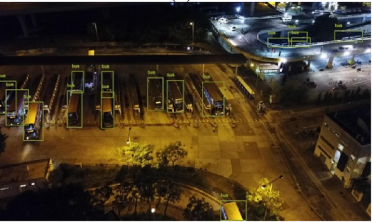

Low-altitude aerial objects themselves carry limited information, so contextual data plays a critical role in small-object detection.

Positive examples for low-altitude aerial objects are insufficient because too few small-scale anchor boxes are generated to match the objects.
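The anchor shortage can be illustrated with a minimal sketch that counts how many anchors overlap a small ground-truth box strongly enough to count as positives. The 12-pixel object, the stride of 16, the anchor sizes, and the IoU threshold of 0.5 are all hypothetical values chosen for illustration; they are not taken from the paper.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def centered_box(cx, cy, size):
    """Square box of the given side length centered at (cx, cy)."""
    half = size / 2.0
    return (cx - half, cy - half, cx + half, cy + half)


def count_positive_anchors(gt, anchor_sizes, stride, image_size, threshold):
    """Count square anchors on a regular grid whose IoU with gt meets the threshold."""
    count = 0
    for size in anchor_sizes:
        for cy in range(stride // 2, image_size, stride):
            for cx in range(stride // 2, image_size, stride):
                if iou(gt, centered_box(cx, cy, size)) >= threshold:
                    count += 1
    return count


# Hypothetical 12x12 px object sitting exactly on an anchor center.
small_object = centered_box(72, 72, 12)

# Assumed anchor sets: one with only large scales, one that adds small scales.
large_only = count_positive_anchors(small_object, (32, 64, 128), 16, 128, 0.5)
with_small = count_positive_anchors(small_object, (8, 16, 32), 16, 128, 0.5)
print(large_only, with_small)
```

In this toy setting the large-scale anchor set yields no positive match for the small object, while adding small scales yields a match, which is the imbalance the statement above describes.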

Low-altitude aerial objects are hard to detect with existing deep learning-based object detectors because of scale variance, small size, and occlusion-related problems. Deep learning-based detectors do not take into account contextual information about the scale of small-sized objects in low-altitude aerial images. This paper proposes a new system that uses the concept of receptive fields and the fusion of feature maps to improve the efficiency of deep object detectors in low-altitude aerial images. A Dilated ResNet Module (DRM) is proposed, motivated by trident networks, which uses dilated convolutions to learn contextual information specifically for small-sized objects. This component makes the model robust to scale variations in low-altitude aerial objects. A Feature Fusion Module (FFM) is then created to supply semantic information for better detection of low-altitude aerial objects. The widely deployed Faster R-CNN is chosen as the base detector for the proposed technique. The resulting dilated convolution-based RCNN using feature fusion (DCRFF) system is implemented on VisDrone, a benchmark low-altitude UAV-based object detection dataset that contains multiple object categories, such as pedestrians and vehicles, in crowded scenes. The experiments demonstrate the performance of the proposed detector on the chosen low-altitude aerial object dataset. The proposed DCRFF system achieves 35.04% mAP on the challenging VisDrone dataset, indicating an average improvement of 2% when compared.
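To make the receptive-field idea concrete, the following is a minimal sketch of how dilation enlarges the span of a convolution kernel without adding weights. It is a 1-D, pure-Python toy and not the paper's DRM; the function names, the three dilation rates (1, 2, 3), and the shared weights across branches are assumptions made for illustration, loosely following the trident-style idea of parallel branches.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a kernel once gaps of (dilation - 1) are inserted between taps."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, weights, dilation):
    """'Valid' 1-D dilated cross-correlation, the operation deep learning calls convolution."""
    span = effective_kernel_size(len(weights), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        # Each tap reads the input at a stride of `dilation` samples.
        out.append(sum(w * signal[start + i * dilation] for i, w in enumerate(weights)))
    return out


# A single impulse as input and one shared 3-tap kernel (hypothetical values).
signal = [0, 0, 0, 1, 0, 0, 0, 0, 0]
weights = [1, 1, 1]

# Parallel branches that share weights and differ only in dilation rate.
branches = {d: dilated_conv1d(signal, weights, d) for d in (1, 2, 3)}

for d, response in branches.items():
    print(d, effective_kernel_size(len(weights), d), response)
```

With a 3-tap kernel, dilation rates 1, 2, and 3 cover spans of 3, 5, and 7 input samples respectively, so each branch sees a different amount of surrounding context for the same number of parameters.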